The term artificial intelligence (AI) was coined by John McCarthy, often called the father of AI, in 1955. By 2024, on the back of digital innovations during the Covid pandemic, AI had become an integral part of our lives. Insurance companies are now using AI to improve claim settlement; lenders are using it to better match borrowers to loan products; and AI-powered chatbots are answering customer queries, among other uses.

While AI has made processes convenient for both providers and receivers, it has also become a deadly weapon in the armoury of online fraudsters.

Says Ankit Ratan, co-founder and CEO, Signzy, a digital banking infrastructure provider: “The evolution of technology is like a double-edged sword: increased sophistication leads to a rise in AI-powered scams. This is a growing concern, particularly in India, where consumer data and personal information are directly impacted.”

A recent study conducted by Forrester Consulting and commissioned by Experian, a leading global information services provider, sheds light on a troubling surge in AI-related frauds in India. A significant 64 per cent of respondents said they had experienced an increase in frauds over the past year, and 67 per cent said they were struggling to keep up with the rapid evolution of such frauds and threats.

Typically, fraudsters impersonate high-trust relationships, such as a bank or financial institution, a close relative, a friend or a boss, and create a sense of urgency or panic that forces you to act in a way you normally wouldn’t. “We have seen several cases of senior citizens, Gen-Xers, and millennials being victims of payment fraud. People across demography are equally susceptible to such scams,” says Adhil Shetty, CEO, BankBazaar. However, senior citizens could be more vulnerable to such fraud, especially if they are not conversant with modern technology.

How AI Aids Fraud

Fraudsters often use AI to facilitate phishing attacks and identity thefts.

“By blending fake and genuine information, fraudsters create synthetic identities that are difficult to distinguish from real ones. They can use these identities to open accounts and apply for loans. Additionally, AI can craft personalised phishing messages by analysing public data and past interactions, increasing the likelihood that recipients will fall for the scam,” says Ratan.

These methods are dangerous because they bypass traditional security measures. So, advancements in AI-driven attacks underscore the importance of implementing sophisticated detection systems.

There is also an element of social engineering to be understood here. “Fraudsters target vulnerable people, such as the elderly. They use deepfake images, stolen identities, and voice cloning to impersonate a friend or family member. They create a scenario of urgency or alarm and can extract money before the victim can think of any wrongdoing,” says Gurudutt Bhobe, vice president, engineering and AI, IDfy, an integrated identity verification platform.

In fact, it is the combination of AI and offline techniques that is the most dangerous. Social engineering, synthetic ID, and phishing attacks prey on human psychology. The quality of fake content is expected to improve, and with increasing digitisation of onboarding processes, checks will need to become more thorough. Fraudsters will either impersonate individuals to gain access or use fake means to infiltrate systems and commit frauds.

Adds Bhobe: “Financial frauds can be broadly categorised into two types: those targeting institutions and those that rob individuals. It is generally easier to target individuals due to their vulnerabilities, while institutional frauds often involve significant insider collusion.”

Types Of AI Frauds

Here’s a look at some of the new-age AI frauds that have gained traction in 2024.

Voice Cloning: For instance, a CFO may be asked to make large payments on the strength of a call from their CEO, only to discover later that the voice was cloned. “In a business context, voice cloning is often utilised in phishing scams, where fraudsters pretend to be a trusted individual to deceive their targets,” says Bhobe. The same may happen in the retail context as well. The idea is that if individuals get a call from a familiar voice, they may act or divulge sensitive information without being suspicious.

A McAfee report found India leading the way in AI-powered voice scam victims, with a staggering 83 per cent of victims losing money after being tricked by a cloned voice. Nearly 47 per cent of Indian adults have encountered a voice scam or know someone who fell for one, almost double the global average. The report also highlights that over 69 per cent of Indians struggle to distinguish between a real voice and an AI-generated one.

Deepfakes: A deepfake is a synthetic media creation, often a video or image, where AI replaces or manipulates a person’s likeness to create realistic but fake content. According to a McAfee report, in India, 38 per cent of people encountered deepfake frauds. Of these, 18 per cent claimed to have been personally affected, with financial losses and compromised personal information being frequent results.

Both voice and video cloning can be clubbed under deepfakes. Says Shetty: “Deepfakes and voice cloning leverage AI to create convincing but fake audio and visual content that closely imitates real people. These are then used for fraudulent purposes, such as impersonating individuals in videos or phone calls to deceive victims into revealing sensitive information or transferring funds.”

What’s In The Works

Financial institutions are doing their bit. Most payments in India require additional authentication beyond just passwords, such as OTPs sent to mobiles. For instance, you need a PIN to transact on UPI, and any card-based transaction has a second level of OTP-based authentication.
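For readers curious about how an OTP-based second check works, here is a minimal sketch in Python. It is purely illustrative and not how any bank or UPI app actually implements authentication. The function names, the six-digit code length and the five-minute validity window are all assumptions made for this example.

```python
import hmac
import secrets
import time

# Assumed validity window for the example; real systems choose their own.
OTP_TTL_SECONDS = 300


def issue_otp(now=None):
    """Create a random 6-digit code and the time at which it expires."""
    now = time.time() if now is None else now
    code = f"{secrets.randbelow(10**6):06d}"  # cryptographically strong randomness
    return code, now + OTP_TTL_SECONDS


def approve_payment(first_factor_ok, submitted_otp, issued_otp, expires_at, now=None):
    """Approve only if the first factor passed AND a fresh, matching OTP was given."""
    now = time.time() if now is None else now
    if not first_factor_ok:   # e.g. the password or card details were wrong
        return False
    if now > expires_at:      # an expired code is rejected even if it matches
        return False
    # Constant-time comparison avoids leaking how many digits matched.
    return hmac.compare_digest(submitted_otp, issued_otp)
```

The point of the second step is that a stolen password alone is not enough: the fraudster also needs the code sent to your phone, which is why you should never read it out to a caller.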

“In addition, financial institutions are also increasingly enhancing the security of their communication channels with customers through the use of encrypted emails and secure portals for exchanging information. They are also investing in customer education campaigns to raise awareness about potential frauds, teaching customers how to recognise phishing attempts and secure their own data,” says Shetty.

The Reserve Bank of India (RBI) actively runs public awareness campaigns about safe banking practices through various media channels, encouraging customers to be vigilant and informed. Going forward, experts feel that more innovative approaches will be needed to tackle increasing fraud (see AI vs AI).

“One of the steps the government should take is to complete the setting up of a National Financial Information Registry (NFIR), which can be a public infrastructure for fraud management. The NFIR is intended to be a centralised database that collects and manages financial information across various institutions in India,” says Shetty.

Such a consolidated database of financial data from multiple sources should be able to provide a comprehensive view of financial activities, making it easier to spot inconsistencies and patterns indicative of fraudulent activities. Moreover, such a database can facilitate real-time monitoring of financial transactions, enabling quicker detection and response to suspicious activities, and potentially stopping fraud before significant damage is done.

The proposed Digital India Trust Agency (DIGITA), which is expected to regulate and certify digital lending platforms, may address the concerns around fraudulent digital lending apps. By regulating digital lending apps, DIGITA can ensure that these platforms adhere to strict operational and ethical guidelines, thus reducing the risk of fraudulent practices. DIGITA’s certification mechanism can also instil greater trust among users by separating fraudulent or predatory lenders from genuine players.

What Should You Do?

As financial institutions and authorities figure out how to tackle fraud, for now, the onus lies on the customer to stay vigilant.

Most such frauds follow a pattern. Every scam employs a few specific tactics to make its requests seem legitimate. This typically involves some form of impersonation, such as posing as a representative of your bank, a financial services institution, or even customer support, along with emails or messages that prompt you to take certain actions and create a sense of urgency or alarm.

Says Shetty: “The resulting turn of affairs compels you to take action, such as making a payment or divulging confidential information, that you wouldn’t normally share or do under regular circumstances.”

It is important to realise that no bank or financial institution will ever call you and ask you to reveal your personal details, make a payment, and so on. “Understanding the signs of fraud and the importance of secure online practices, and staying informed about the latest security threats and protective measures can help consumers recognise and avoid sophisticated scams, reducing their vulnerability,” says Shetty.

As digitisation evolves, it will be a constant catch-up game that customers, authorities and fraudsters will play. To tackle these challenges, collaboration between governments, regulators, financial institutions, and tech companies will be essential. In the meantime, what will help you is exercising caution by thinking before acting, and by staying informed and updated about the latest threats.

AI Vs AI

Using AI tech to fight AI frauds

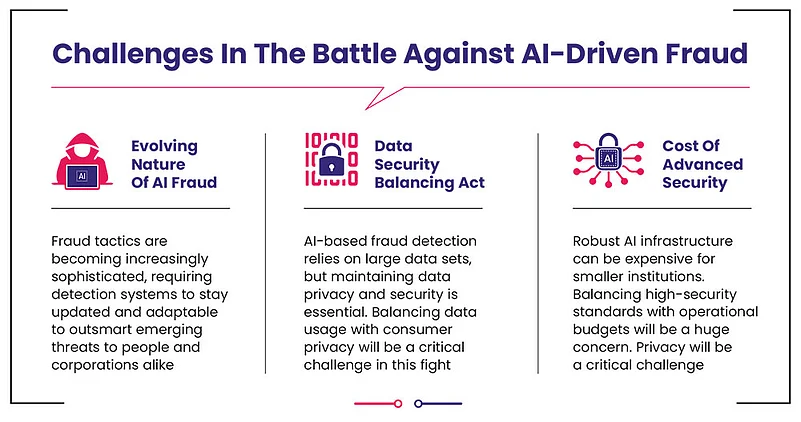

To combat AI fraud, businesses will need to use AI itself. According to the study conducted by Forrester Consulting, nearly 67 per cent of businesses believe that solutions powered by AI and machine learning (ML) will drive the future of fraud prevention. Transparent ML models, continual automatic model retraining, and real-time analysis are crucial for staying ahead of evolving fraud.

The study underscores the importance of AI and ML technologies in the future of effective fraud prevention. These advanced solutions can swiftly analyse extensive datasets, identify anomalies, and unveil fraud patterns. This proactive approach not only protects businesses from significant losses but also strengthens the integrity of financial ecosystems.
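To give a rough sense of what "identifying anomalies" can mean in practice, here is a minimal sketch in Python. It is not the method used by Experian, Forrester or any vendor named in this article. It is a simple, well-known statistical check (a robust z-score based on the median) applied to made-up transaction amounts. The function name, the sample figures and the 3.5 cut-off are assumptions chosen for illustration.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return positions of amounts that sit unusually far from the typical value.

    Uses the median absolute deviation (MAD), which, unlike a plain average,
    is not distorted by the very outliers we are trying to catch.
    """
    typical = median(amounts)
    deviations = [abs(a - typical) for a in amounts]
    mad = median(deviations)
    if mad == 0:  # all amounts are (nearly) identical; nothing stands out
        return []
    # 0.6745 rescales MAD so the score is comparable to a standard z-score.
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - typical) / mad > threshold
    ]


# Hypothetical spending history in rupees; the last payment is out of character.
history = [1200, 950, 1100, 1300, 1050, 990, 1150, 98000]
print(flag_anomalies(history))  # prints [7]
```

Real fraud systems look at far more than the amount, such as device, location, time of day and payee history, and they retrain their models continually, but the underlying idea is the same: learn what normal looks like and flag what does not fit.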

Says Manish Jain, country MD, Experian India: “Unlike traditional methods, AI excels in swift adaptation and real-time analysis, actively defending against ever-changing threats. Unlocking the power of data and ML, AI becomes a custodian at digital gateways, thus ensuring consumer protection and preventing substantial losses for businesses. It plays a pivotal role in fortifying security, protecting businesses from significant financial risks, and building a robust credit ecosystem.” So, in the end, one would need to fight fire with fire, as the saying goes.