AI can read your face, mimic your voice, and rewrite your words in seconds. A few seconds of audio from a WhatsApp voice note can be used to clone your voice, an old conference clip can be reshaped into a high-definition video of you endorsing a Ponzi scheme, and a stolen profile photo can be used to advertise a fake wealth advisory site. Nor are these crude fakes: the lip-sync is near perfect and the body language convincing.

Among the many dangers of AI are new forms of fraud and convincing deepfakes that can dent your reputation, rob you of your credentials, or cost investors their money.

Rajesh Krishnamoorthy, an adjunct professor at the National Institute of Securities Markets (NISM) and an independent director in the alternative investments space, who recently fell victim to a deepfake, says that with the advent of AI, it has become very easy to create morphed images and videos.

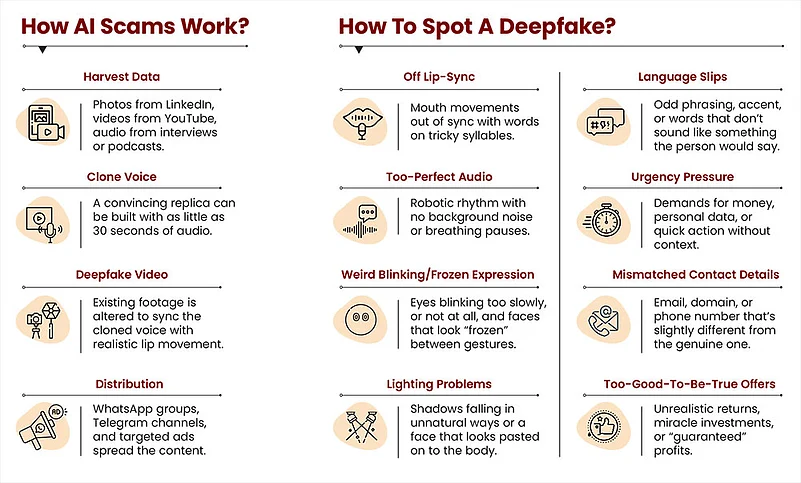

Cybersecurity professionals say AI-powered scams often employ a mix of stolen material, synthetic voices, and emotional triggers.

Rise In Deepfakes

Globally, deepfake videos of Ukrainian President Volodymyr Zelenskyy urging soldiers to surrender have surfaced. In India, AI is being used in matrimonial frauds, political smear campaigns and blackmail, and to lure investors into fraudulent schemes.

The Reserve Bank of India (RBI) had to issue a public advisory in November 2024 when deepfake videos began circulating that showed the RBI governor pitching investment schemes. The RBI said the videos were fake, that its officials do not endorse or advise on such schemes, and that the public should ignore them.

The government’s cybersecurity agency, CERT-In, has also sounded the alarm about deepfake-based fraud and the risks of generative AI. It issued an advisory in November 2024 highlighting how AI-generated media was being weaponised for impersonation scams, disinformation, and social engineering attacks.

According to a report, The State of AI-Powered Cybercrime: Threat & Mitigation Report-2025, cybercriminals in Karnataka alone swindled nearly ₹938 crore between January and May 2025, an exponential leap from previous years.

Victims On Both Sides

Rajesh realised he had become a victim when a colleague forwarded him a link to a website called RiaFin, urging him to “check this out”. The site offered suspiciously high returns, but what surprised him wasn’t the scheme; it was his own photograph staring back at him. His professional headshot accompanied text that appeared to endorse the platform. The more he clicked, the worse it got: fabricated testimonials, fake credentials, all in his name.

Rajesh, who had never heard of the company, has served a legal notice on the platform. “I reported it to my social circles through a post on a professional network. I also sent individual messages to many. As I am also a director, the compliance department of the company reported it to both the cybercrime cell and the regulator, as we are a regulated entity in the capital markets in India,” he says.

The website vanished within days, but not before Rajesh’s reputation was put at risk.

For Rajesh, personal worry is secondary. “I worry about the people who might get scammed, although I am concerned about my own reputation, too, which has taken so many decades to build. I am confident though, as we have a justice system that is very clear that every individual has the right to control the commercial use of their identity. Indian courts have consistently held that personality rights encompass protection of name, image, likeness, voice and other distinctive personal attributes from unauthorised commercial exploitation,” he adds.

Such deepfakes typically lure people with fake videos of, or fake quotes attributed to, influential personalities.

In Pune, a 59-year-old man lost ₹43 lakh between February and May 2025 after being lured by what the police later confirmed was a deepfake video featuring Infosys founder N. R. Narayana Murthy and his wife, author and philanthropist Sudha Murthy, endorsing an AI-powered stock trading platform that promised exceptional returns.

In November 2024, two Bengaluru residents lost a combined ₹83-95 lakh after watching deepfake investment pitches featuring Narayana Murthy and Mukesh Ambani. A month earlier, telecom tycoon Sunil Bharti Mittal revealed that scammers had cloned his voice to call a senior executive in Dubai and ask for a large sum to be transferred. The voice was remarkably accurate, but the executive’s alertness foiled the scam.

In July 2025, doctored videos of Prime Minister Narendra Modi and Union Finance Minister Nirmala Sitharaman promoting platforms like “Immediate Fastx” were circulating on Facebook. The Press Information Bureau and the State Bank of India issued warnings that they were fake.

Why Are Deepfakes Dangerous?

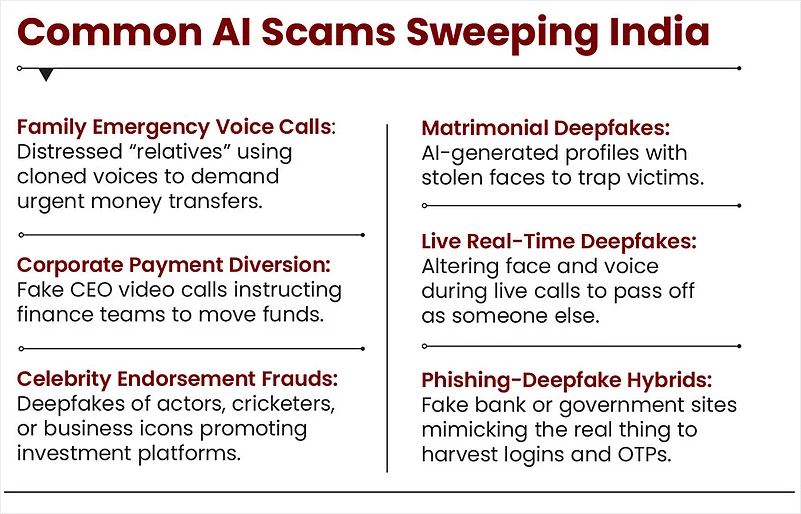

At present, deepfakes are being used to mimic not just influential personalities but also people in positions of authority and family members (see Common AI Scams Sweeping India).

What makes these scams so dangerous is that they don’t need to hack your passwords or bank accounts. They just need to hijack your trust, and the faces of the people you trust.

Devesh Tyagi, CEO of the National Internet Exchange of India (NIXI), a not-for-profit organisation under the Ministry of Electronics and Information Technology (MeitY), says: “Scammers use familiar voices, faces and brands to lower your guard. A fake website can mirror your bank’s design perfectly and a cloned voice can speak fluently in your mother tongue. Urgent messages push people to act before thinking.”

Jiten Jain, director at Voyager Infosec, an information security services provider, says, “We are seeing a sharp rise in frauds involving fake video calls, AI-generated voices and deepfake videos. These attacks succeed because they target our emotions and trust. The best defence is awareness: verify when money or sensitive data is involved.”

India’s multilingual Internet makes these scams even more dangerous, since a cloned voice can address victims fluently in their own language.

IPS officer and cyber forensic specialist Shiva Prakash Devaraju recalls a case in which a businessman nearly transferred money to what he thought was his son’s account. The “son” had asked for money to pay medical bills in London, though the real son was in Bengaluru. Devaraju adds: “What deceived the father was the AI voice cloning. The scammer had lifted less than a minute of the son’s voice from an Instagram reel, fed it into a voice synthesis tool, and in under an hour had a near-perfect replica.”

According to Devaraju, two main factors make voice and video cloning easy. One, the abundance of raw material (voices and photos) available on social media. Two, plug-and-play AI tools that can produce a voice clone or deepfake video within minutes.

How To Avoid These Scams?

The simplest way to stay clear of AI scams is to pause rather than react immediately. Don’t trust a voice or video just because it looks and sounds familiar; always double-check through another channel you know is genuine. If a “relative” calls in distress, call them back on their usual number. If your “CEO” emails you about an urgent transfer, confirm in person or over an official line. Check website addresses letter by letter, look for verified domains, and never click on links sent in unsolicited messages. Scammers want you to act fast, so verify first and then act.

“Recognising these tricks and slowing down your response can make it tougher for fraudsters to pass themselves off as someone you trust,” says Tyagi.

Says Devaraju: “Scams succeed when the victim feels emotionally cornered and time-pressed. If you can pause, verify, and consult, you take away 90 per cent of the scammer’s advantage.”

Tyagi says NIXI has also tightened domain registration rules, mandating know-your-customer (KYC) checks for all .IN and .भारत domains and introducing secure zones such as .bank.in and .fin.in. “Protect your digital identity; use strong passwords, two-factor authentication, and confirm requests through official channels.”

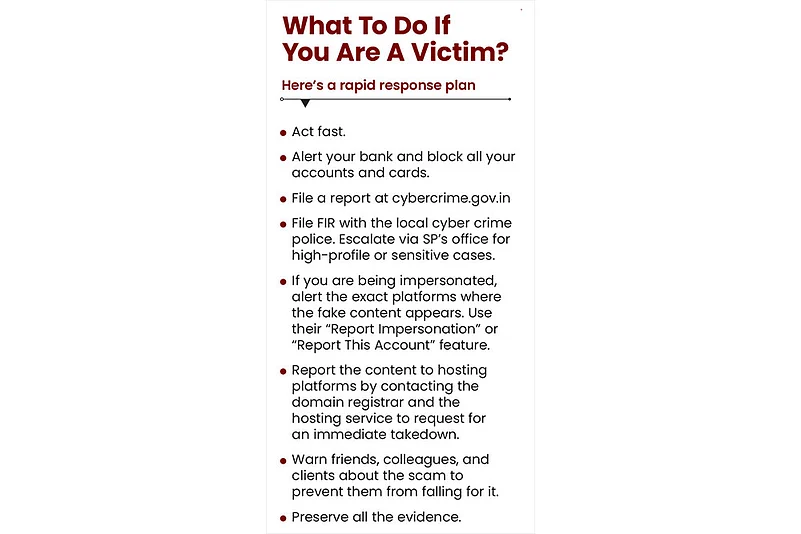

If you do become a victim, act fast (see What To Do If You Are A Victim?). Says Tyagi: “I have personally seen cases where a deepfake video was removed in under 6 hours because the victim acted within minutes.”

India’s IT Act and Penal Code provisions cover impersonation and fraud, but both predate generative AI. The proposed Digital India Act is expected to address deepfakes directly, along with platform responsibility and detection standards. In the meantime, the tech-savvy can look out for others. For many older people, the Internet is a mix of convenience and confusion, so younger people can check on their parents, grandparents, or elderly friends and neighbours. In many cases, this patient guidance can prevent a lifetime of regret.

shivangini@outlookindia.com